Cloud-Optimized Geospatial Formats Overview

These slides summarize the Cloud-Optimized Geospatial Formats Guide, to support presentations.


Google Slides version of this content: Cloud-Optimized Geospatial Formats.

What Makes Cloud-Optimized Challenging?

- There is no one-size-fits-all approach.

- Earth observation data may be processed into raster, vector, and point cloud data types and stored in a long list of data formats and structures.

- Optimization depends on the user.

- Users must learn new tools, and which data is accessed, and how, may differ from one user to another.

- Hopefully, though, only a few new methods and concepts are necessary.

What Makes Cloud-Optimized Challenging?


There is no one-size-fits-all packaging for data, as the optimal packaging is highly use-case dependent.

Source: Task 51 - Cloud-Optimized Format Study (authors: Chris Durbin, Patrick Quinn, Dana Shum)

What Does Cloud-Optimized Mean?

Cloud-optimized file formats are read-oriented, designed to support:

- Partial reads

- Parallel reads

What Does Cloud-Optimized Mean?

- File metadata in one read

- When data is accessed over the internet, for example from cloud storage, latency is higher than with local storage, so it is preferable to fetch more data in fewer reads. Once all metadata has been fetched in one read, the client knows where every piece of data lives, so the data reads themselves can happen concurrently.

- Getting all metadata in one read is an easy win: that metadata can then be used to read a cloud-native dataset efficiently.

- A cloud-native dataset is one with small addressable chunks via files, internal tiles, or both.
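The pattern described above (one read for all metadata, then concurrent reads of small addressable chunks) can be sketched in Python. This is a minimal illustration under stated assumptions: `FakeObjectStore`, `build_file`, and `read_chunks` are hypothetical names, the "remote" object is simulated in memory, and the file layout (an 8-digit length prefix followed by a JSON chunk index) is invented for the example and is not any real format.

```python
import json
import threading
from concurrent.futures import ThreadPoolExecutor


class FakeObjectStore:
    """Hypothetical in-memory stand-in for a cloud object that only serves byte ranges."""

    def __init__(self, data: bytes):
        self._data = data
        self._lock = threading.Lock()
        self.requests = 0  # each call models one high-latency round trip

    def read_range(self, start: int, length: int) -> bytes:
        with self._lock:
            self.requests += 1
        return self._data[start:start + length]


def build_file(chunks):
    """Invented layout: 8-digit header length, JSON index of [offset, length], then chunk bytes."""
    index, pos = [], 0
    for chunk in chunks:
        index.append([pos, len(chunk)])  # offsets are relative to the start of the data section
        pos += len(chunk)
    header = json.dumps({"chunks": index}).encode()
    return f"{len(header):08d}".encode() + header + b"".join(chunks)


def read_chunks(store, wanted, first_read=4096):
    """Fetch all metadata in (usually) one read, then fetch the wanted chunks concurrently."""
    head = store.read_range(0, first_read)
    header_len = int(head[:8])
    if 8 + header_len > len(head):
        # Header is larger than the first read: fetch the remainder (one extra request).
        head += store.read_range(len(head), 8 + header_len - len(head))
    index = json.loads(head[8:8 + header_len])["chunks"]
    data_start = 8 + header_len
    with ThreadPoolExecutor(max_workers=8) as pool:
        futures = [
            pool.submit(store.read_range, data_start + index[i][0], index[i][1])
            for i in wanted
        ]
        return [f.result() for f in futures]
```

Over a real network, the thread pool overlaps the latency of the chunk requests; that overlap is only possible because the chunk locations were all known after the metadata read.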

What Does Cloud-Optimized Mean?

- Accessible over HTTP using range requests.

- This makes it compatible with object storage (an alternative to file storage on local disk), which many compute instances can read over HTTP concurrently.

- Supports lazy access and intelligent subsetting.

- Integrates with high-level analysis libraries and distributed frameworks.

image credit: Ryan Abernathey

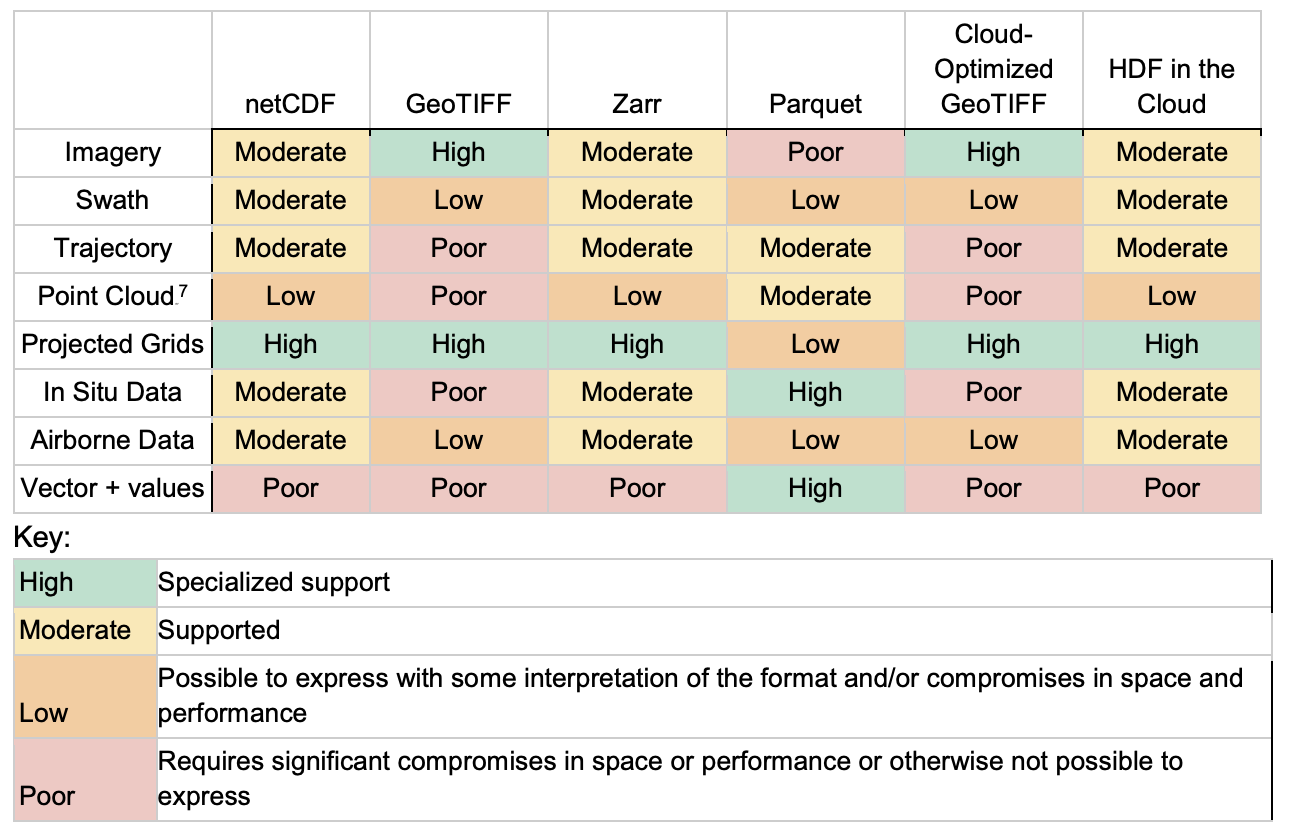
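A range request is an ordinary HTTP GET with a "Range" header; a server that honors it replies with status 206 Partial Content and a "Content-Range" header. The sketch below, using only Python's standard library, builds such a request without sending it and parses a reply header. The URL is a placeholder and the helper names are hypothetical.

```python
import re
import urllib.request

# Matches e.g. "bytes 0-16383/1048576"; the total may be "*" when unknown.
_CONTENT_RANGE = re.compile(r"bytes (\d+)-(\d+)/(\d+|\*)")


def range_request(url, start, end):
    """Build (but do not send) a GET request for bytes start..end, inclusive."""
    if start < 0 or end < start:
        raise ValueError("invalid byte range")
    return urllib.request.Request(url, headers={"Range": f"bytes={start}-{end}"})


def parse_content_range(value):
    """Parse a Content-Range response header into (start, end, total_or_None)."""
    match = _CONTENT_RANGE.fullmatch(value.strip())
    if match is None:
        raise ValueError(f"unsupported Content-Range: {value!r}")
    start, end, total = match.groups()
    return int(start), int(end), None if total == "*" else int(total)
```

Passing such a request to `urllib.request.urlopen` against a server that supports ranges should yield a 206 response whose body holds at most end - start + 1 bytes; the same request shape works against object storage exposed over HTTP.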

Formats by Data Type

| Format | Data Type | Standard Status |
|---|---|---|
| Cloud-Optimized GeoTIFF (COG) | Raster | OGC standard for comment |
| Zarr, Kerchunk, Icechunk | Multidimensional raster | ESDIS and OGC standards in development |
| Cloud-Optimized Point Cloud (COPC), Entwine Point Tiles (EPT) | Point clouds* | No known ESDIS or OGC standard |
| FlatGeobuf, GeoParquet | Vector | No known ESDIS standard; draft OGC standard |

Formats by Adoption

| Format | Adoption | Standard Status |
|---|---|---|
| Cloud-Optimized GeoTIFF (COG) | Widely adopted | OGC standard for comment |
| Zarr, Kerchunk, Icechunk | Less widely adopted overall, but widely adopted in specific communities | ESDIS and OGC standards in development |
| Entwine Point Tiles (EPT), Cloud-Optimized Point Cloud (COPC) | Less common (supported by PDAL) | No known ESDIS or OGC standard |
| GeoParquet, FlatGeobuf | Less common (supported by OGR) | No known ESDIS standard; draft OGC standard |

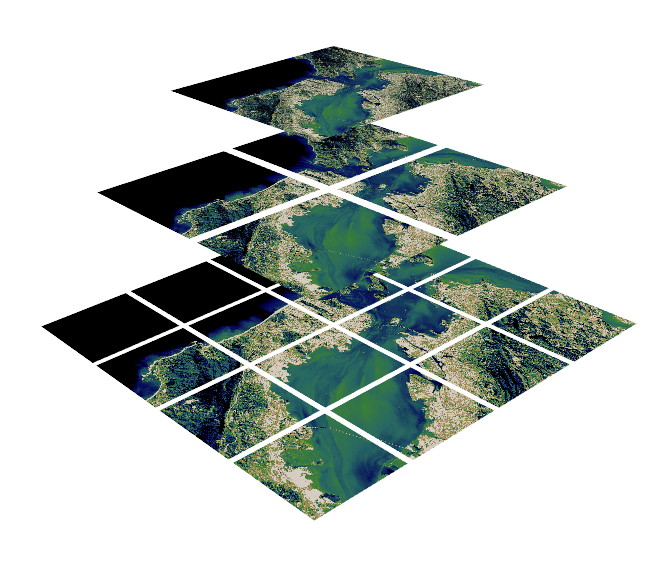

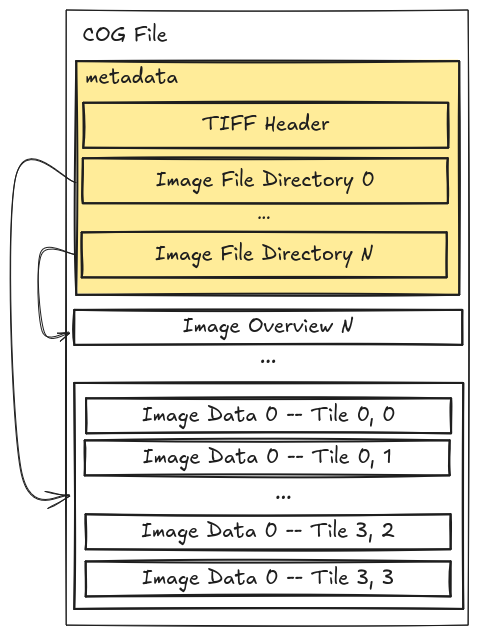

What are COGs?

- COGs hold raster data, typically a snapshot in time of gridded data, for example a digital elevation model (DEM).

- COGs are a de facto standard, with an Open Geospatial Consortium (OGC) standard under review.

- The standard specifies how a conformant GeoTIFF must be formatted, with additional requirements for tiling and overviews.

image source: https://www.kitware.com/deciphering-cloud-optimized-geotiffs/
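Overviews are reduced-resolution copies of the full image. A common convention, assumed here rather than stated above, is to halve the resolution at each level (factors 2, 4, 8, ...) until the smallest overview fits in a single tile. The hypothetical helper below lists the resulting overview sizes for an assumed 512-pixel tile.

```python
import math


def overview_levels(width, height, tile_size=512):
    """Return (factor, width, height) for each power-of-two overview.

    Assumed rule: keep halving until the overview fits inside one tile.
    """
    levels = []
    factor = 2
    w, h = width, height
    while w > tile_size or h > tile_size:
        w = math.ceil(width / factor)   # partial pixels round up
        h = math.ceil(height / factor)
        levels.append((factor, w, h))
        factor *= 2
    return levels
```

For a 10000 x 8000 image this yields five overviews, the coarsest being 313 x 250, so a client rendering a zoomed-out view can read that one small level instead of the full-resolution data.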

What are COGs?

- COGs contain image file directories (IFDs), which tell clients where to find the different overview levels and the data within the file.

- Clients can use this metadata to read only the data they need to visualize or calculate.

- This internal organization suits clients that issue HTTP GET range requests (using the “Range: bytes=start_offset-end_offset” HTTP header).

image source: https://medium.com/devseed/cog-talk-part-1-whats-new-941facbcd3d1

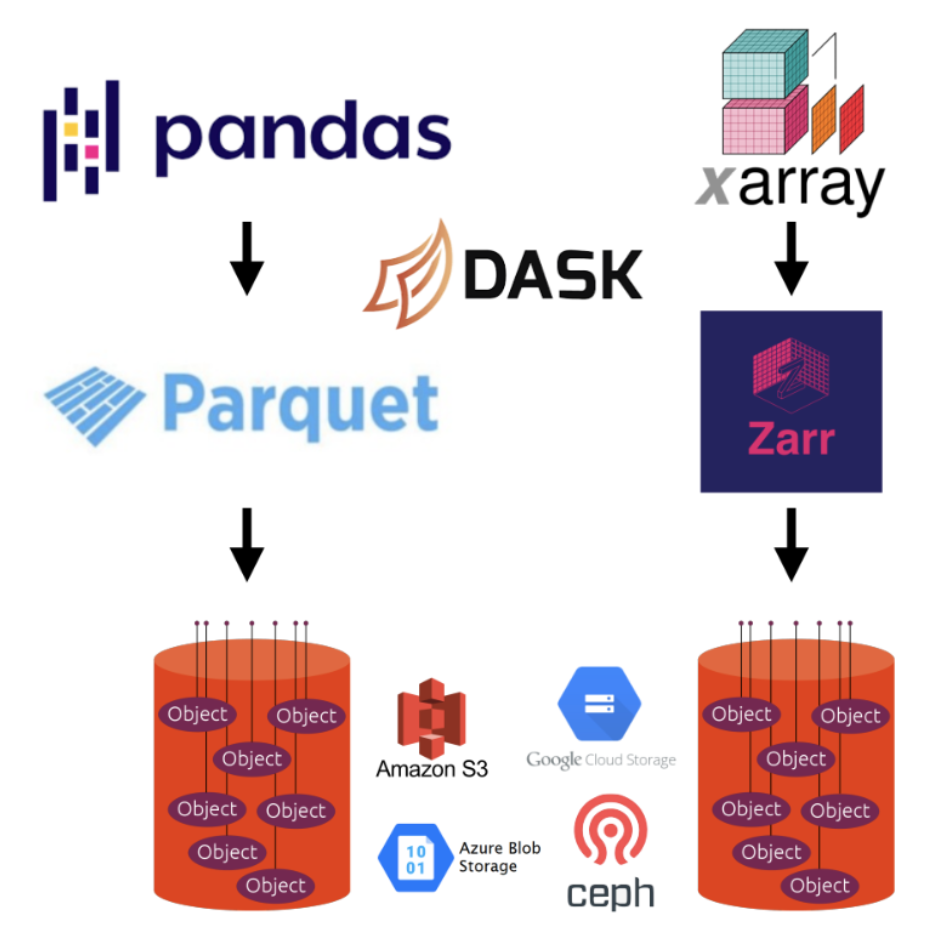

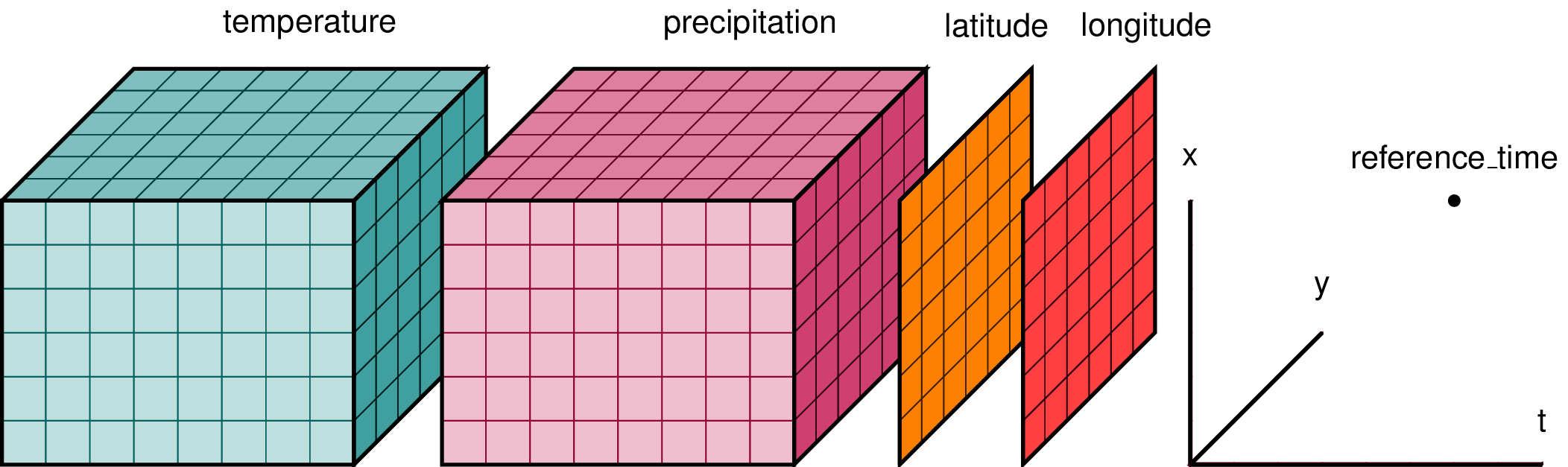
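To make the IFD idea concrete, here is a minimal Python sketch of how a client could parse a classic (non-BigTIFF) TIFF header and its first IFD from bytes it has already fetched, then turn the TileOffsets (tag 324) and TileByteCounts (tag 325) entries into HTTP range header values. It decodes only SHORT and LONG values and is not a complete TIFF reader; the function names are hypothetical, and real clients such as GDAL handle many more cases.

```python
import struct

TILE_OFFSETS = 324      # TIFF tag: byte offset of each tile
TILE_BYTE_COUNTS = 325  # TIFF tag: compressed size of each tile
# Only SHORT (type 3) and LONG (type 4) values are decoded in this sketch.
TYPE_FORMATS = {3: ("H", 2), 4: ("I", 4)}


def parse_header(buf):
    """Return (endian, first_ifd_offset) from the 8-byte classic TIFF header."""
    if buf[:2] == b"II":
        endian = "<"  # little-endian
    elif buf[:2] == b"MM":
        endian = ">"  # big-endian
    else:
        raise ValueError("not a TIFF file")
    magic, ifd_offset = struct.unpack(endian + "HI", buf[2:8])
    if magic != 42:
        raise ValueError("not a classic TIFF (BigTIFF uses 43)")
    return endian, ifd_offset


def read_ifd(buf, offset, endian):
    """Parse one IFD: a 2-byte entry count, 12-byte entries, then the next IFD offset."""
    (count,) = struct.unpack_from(endian + "H", buf, offset)
    tags = {}
    for i in range(count):
        tag, typ, n, raw = struct.unpack_from(endian + "HHI4s", buf, offset + 2 + 12 * i)
        if typ not in TYPE_FORMATS:
            continue  # skip value types this sketch does not decode
        code, size = TYPE_FORMATS[typ]
        fmt = f"{endian}{n}{code}"
        if n * size <= 4:
            values = struct.unpack_from(fmt, raw)  # small values are stored inline
        else:
            (value_offset,) = struct.unpack(endian + "I", raw)
            values = struct.unpack_from(fmt, buf, value_offset)
        tags[tag] = list(values)
    (next_ifd,) = struct.unpack_from(endian + "I", buf, offset + 2 + 12 * count)
    return tags, next_ifd


def tile_ranges(tags):
    """Turn tile offsets and byte counts into HTTP Range header values."""
    return [
        f"bytes={off}-{off + size - 1}"
        for off, size in zip(tags[TILE_OFFSETS], tags[TILE_BYTE_COUNTS])
    ]
```

A client would typically fetch the first few kilobytes of the file (the exact amount is an implementation choice), run these steps on them, and then request only the tiles it needs.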

What is Zarr?

- Zarr is used to represent multidimensional raster data, or “data cubes”, such as weather data and climate model output.

- Chunked, compressed, N-dimensional arrays.

- The metadata is stored separately from the data itself. The data is often reorganized and compressed into many chunk files, so users can access only the chunks they are interested in.

image source: https://xarray.dev/

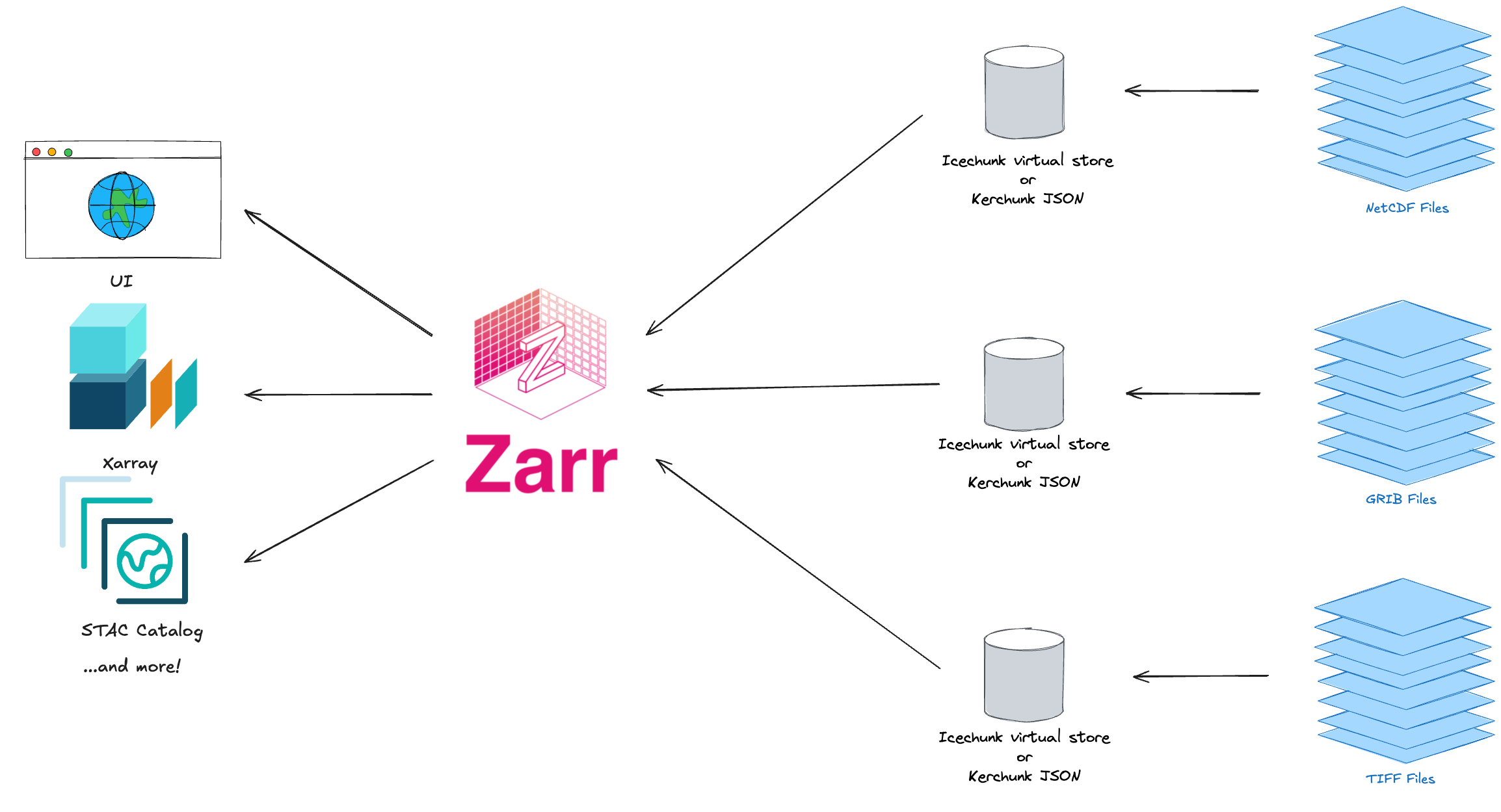
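Because each Zarr chunk is stored as its own object, a client can work out which objects to fetch from the selection alone. A minimal sketch, assuming a regular chunk grid and the default chunk key encodings ("0.0" style keys in Zarr V2, "c/0/0" style keys in Zarr V3); `chunk_keys` is a hypothetical helper, not part of the Zarr Python API.

```python
import itertools


def chunk_keys(selection, chunk_shape, zarr_format=2):
    """Keys of the chunks that intersect a selection.

    selection: one (start, stop) half-open index range per dimension.
    chunk_shape: chunk length per dimension (regular chunk grid assumed).
    """
    per_dim = []
    for (start, stop), size in zip(selection, chunk_shape):
        if stop <= start:
            return []  # an empty selection touches no chunks
        per_dim.append(range(start // size, (stop - 1) // size + 1))
    keys = []
    for idx in itertools.product(*per_dim):
        parts = [str(i) for i in idx]
        # Default key encodings: "0.1.0" in Zarr V2, "c/0/1/0" in Zarr V3.
        keys.append(".".join(parts) if zarr_format == 2 else "c/" + "/".join(parts))
    return keys
```

For a hypothetical daily array of shape (365, 720, 1440) chunked as (30, 180, 360), reading the first 10 days over rows 100 to 199 and columns 0 to 359 touches only 2 of the 208 chunks, so only 2 objects are fetched.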

What is Virtual Zarr?

- Virtual Zarr stores contain Zarr metadata along with references to data in archival file formats, so you can get the benefits of partial and parallel reads for archives in NetCDF4, HDF5, GRIB2, TIFF, and FITS. Kerchunk and Icechunk provide ways to persist Virtual Zarr stores on disk or in object stores.
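Kerchunk's JSON reference format (version 1) maps each Zarr key either to inline content or to a [url, offset, length] list pointing into the original archive file. The sketch below resolves one key to either inline bytes or a URL plus an HTTP range header value. It is a simplification based on our reading of the format: it ignores base64-encoded inline values, URL templates, and version 0 files, and `resolve` is a hypothetical helper rather than a Kerchunk function. The bucket and file names in any example are placeholders.

```python
from typing import NamedTuple, Optional


class Resolved(NamedTuple):
    url: Optional[str]           # where the bytes live (None for inline values)
    range_header: Optional[str]  # HTTP Range value, or None to read the whole object
    inline: Optional[bytes]      # content stored directly in the reference file


def resolve(refs, key):
    """Resolve one Zarr key in a version-1 Kerchunk-style reference dict."""
    value = refs["refs"][key]
    if isinstance(value, str):
        # Inline content, e.g. Zarr metadata JSON (base64 values are not handled here).
        return Resolved(None, None, value.encode())
    if len(value) == 1:
        return Resolved(value[0], None, None)  # [url]: the whole file
    url, offset, length = value
    return Resolved(url, f"bytes={offset}-{offset + length - 1}", None)
```

The archival file itself is untouched: the reference set supplies the Zarr metadata and chunk locations, so a Zarr reader can issue the same partial, parallel range reads it would use against a native Zarr store.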

Zarr Specs in Development

- Zarr V2 is widely adopted. Zarr V3 was recently released.

- Zarr V3 provides additional extension mechanisms and sharding, which allows high concurrency while minimizing the number of files.

- Zarr Python 3 reads and writes Zarr format V3 while still supporting Zarr V2, with dramatic performance improvements.

- Brianna Pagán (formerly NASA, currently Development Seed) has organized the OGC GeoZarr standards working group (SWG) to establish standards for geospatial metadata. The SWG includes representatives from many other organizations across the industry.

- The GeoZarr spec defines conventions for organizing geospatial data in a Zarr store. Specifically, it defines conventions for the metadata of Zarr DataArrays and Datasets, and for organizing the associated data, so that a store conforms as a GeoZarr archive. The spec remains in development.

- Icechunk provides features including data version control and serializable isolation on top of Zarr. Icechunk is still under rapid development and is moving towards a v1.0 release.

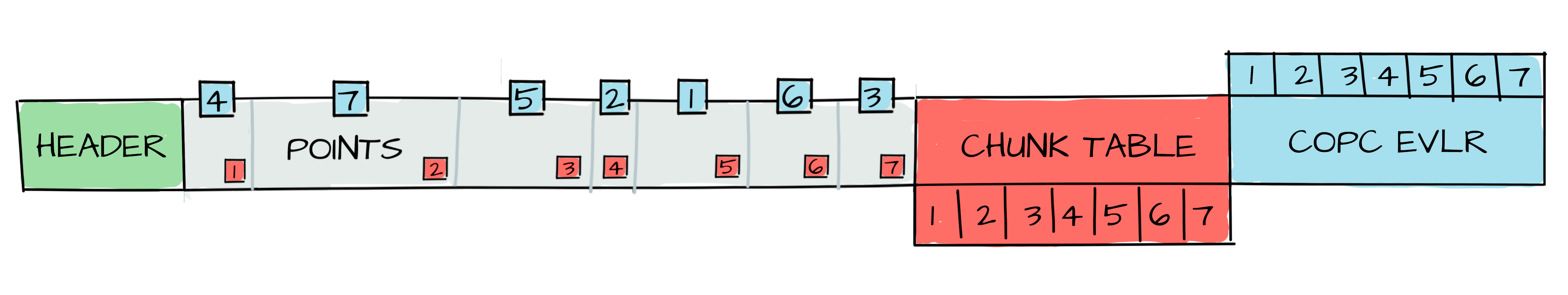

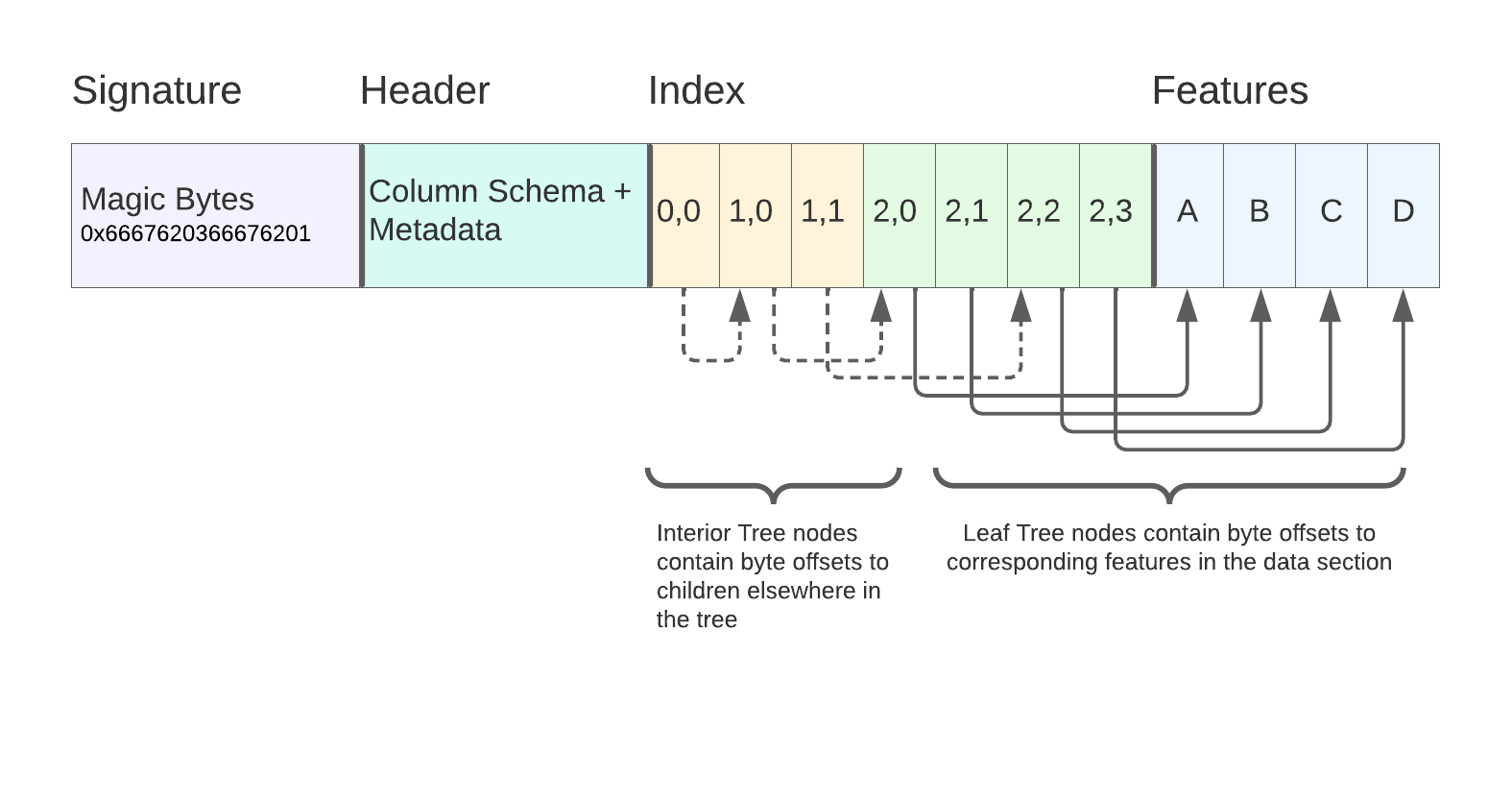
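The sharding idea in Zarr V3 can be illustrated with index arithmetic. Assuming a regular chunk grid in which each shard holds a fixed block of chunks, a chunk's shard is found by integer division and its position inside the shard by the remainder. The sketch also counts stored objects with and without sharding for a made-up array; the row-major position assumes the shard's internal index lists inner chunks in C order. All names and shapes are hypothetical.

```python
import math


def grid_shape(shape, block):
    """Number of blocks along each dimension (partial edge blocks count as whole)."""
    return tuple(math.ceil(s / b) for s, b in zip(shape, block))


def locate(chunk_index, chunks_per_shard):
    """Return (shard index, position inside shard, row-major position inside shard)."""
    shard = tuple(c // n for c, n in zip(chunk_index, chunks_per_shard))
    inner = tuple(c % n for c, n in zip(chunk_index, chunks_per_shard))
    linear = 0
    for i, n in zip(inner, chunks_per_shard):
        linear = linear * n + i
    return shard, inner, linear


# Hypothetical array: 365 daily grids of 720 x 1440, chunked (30, 180, 360).
chunk_grid = grid_shape((365, 720, 1440), (30, 180, 360))  # (13, 4, 4) -> 208 chunk objects
shard_grid = grid_shape(chunk_grid, (4, 4, 4))              # (4, 1, 1) -> 4 shard objects
```

Readers still address individual chunks (each shard records where its inner chunks sit), so concurrency is preserved while the store holds 4 objects instead of 208.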

COPC (Cloud-Optimized Point Cloud)

image source: https://copc.io/

- A point cloud is a set of data points in space, such as those gathered from LiDAR measurements.

- A COPC file is a valid LAZ (compressed LAS) file.

- Similar to COGs but for point clouds: COPC is just one file, but the data is reorganized into a clustered octree instead of regularly gridded overviews.

- Two key features:

  - Support for partial decompression, because the data is stored in a series of chunks.

  - Variable-length records (VLRs) can store application-specific metadata of any kind. COPC uses VLRs to describe the octree structure.

- Limitation: Not all attribute types are compatible.
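The clustered octree can be addressed with (level, x, y, z) keys: the root cube is level 0, and each level splits every node into eight children. The sketch below computes which node contains a point at a given level, plus a node's parent and children. It assumes a cubic root with a known minimum corner and edge length; as we understand the COPC spec, the file's info VLR records that cube's center and half-size. All names here are hypothetical, and reading real COPC files is better left to a library such as PDAL.

```python
from typing import NamedTuple


class VoxelKey(NamedTuple):
    level: int
    x: int
    y: int
    z: int


def key_for_point(point, origin, cube_size, level):
    """Key of the octree node at `level` that contains `point`.

    Assumes a cubic root node with minimum corner `origin` and edge `cube_size`.
    """
    cells = 2 ** level          # nodes per axis at this level
    step = cube_size / cells    # edge length of one node
    idx = []
    for p, o in zip(point, origin):
        i = int((p - o) // step)
        idx.append(min(max(i, 0), cells - 1))  # clamp points on the far boundary
    return VoxelKey(level, *idx)


def parent(key):
    if key.level == 0:
        raise ValueError("the root node has no parent")
    return VoxelKey(key.level - 1, key.x // 2, key.y // 2, key.z // 2)


def children(key):
    return [
        VoxelKey(key.level + 1, 2 * key.x + dx, 2 * key.y + dy, 2 * key.z + dz)
        for dx in (0, 1) for dy in (0, 1) for dz in (0, 1)
    ]
```

A viewer can walk from the root toward the region of interest, stopping at whatever level gives enough detail, and fetch only the chunks for those nodes.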

FlatGeobuf

- Vector data is traditionally stored as rows representing points, lines, or polygons with an attribute table.

- FlatGeobuf is a binary encoding format for geographic data: a single file of FlatBuffers holding a collection of Simple Features.

- A row-based layout with a streamable spatial index optimizes for remote reading.

- Developed with OGR compatibility in mind, so it works with existing OGR APIs, e.g., from Python and R.

- Works with HTTP range requests, and has CDN compatibility.

- Limitation: the data is deliberately not compressed, to allow random reads.

- Learn more: https://github.com/flatgeobuf/flatgeobuf, Kicking the Tires: Flatgeobuf

image source: https://worace.works/2022/02/23/kicking-the-tires-flatgeobuf/

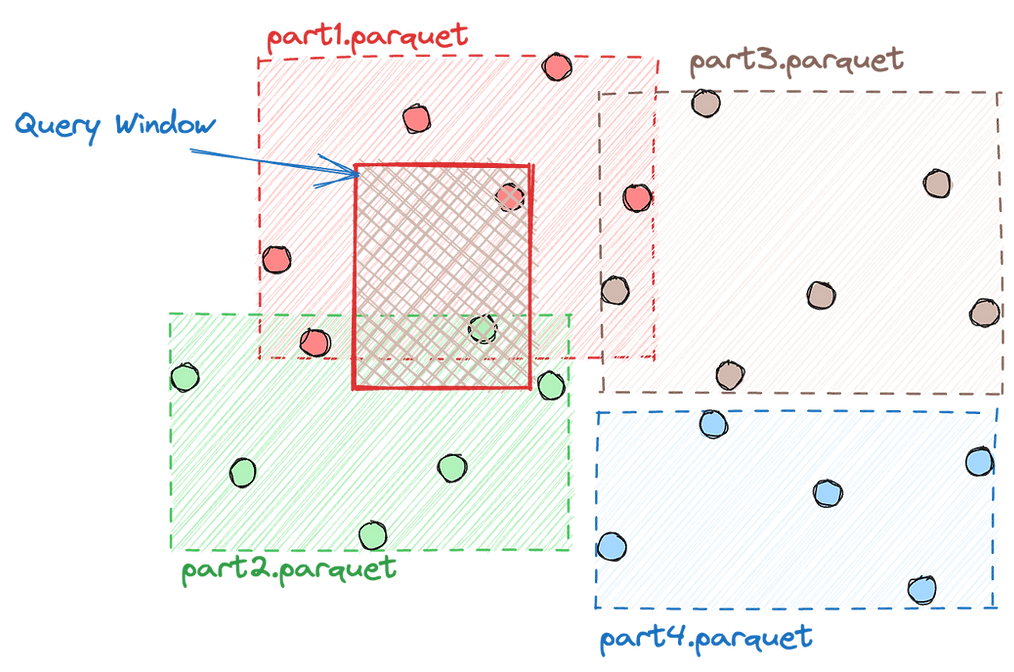
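Reading FlatGeobuf remotely starts with a small range request for the beginning of the file. As we understand the specification, a file opens with 8 magic bytes ("fgb", a major version byte, "fgb", a patch byte) followed by a size-prefixed FlatBuffers header whose length is a little-endian uint32. Under that assumption, the hypothetical helper below checks the magic bytes in a 12-byte prefix and returns the byte range covering the magic bytes and header, which a client would fetch next before consulting the spatial index.

```python
import struct


def header_range(prefix):
    """From the first 12 bytes of a FlatGeobuf file, return (major_version, Range value).

    Assumed layout: 8 magic bytes ("fgb", major version, "fgb", patch version),
    then a little-endian uint32 giving the size of the FlatBuffers header.
    """
    if len(prefix) < 12:
        raise ValueError("need at least 12 bytes")
    if prefix[0:3] != b"fgb" or prefix[4:7] != b"fgb":
        raise ValueError("not a FlatGeobuf file")
    major = prefix[3]
    (header_size,) = struct.unpack_from("<I", prefix, 8)
    end = 8 + 4 + header_size - 1  # magic + size prefix + header, inclusive end
    return major, f"bytes=0-{end}"
```

Because features are stored uncompressed, once the header and index are known a client can request the byte range of any single feature directly.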

GeoParquet

- Vector data is traditionally stored as rows representing points, lines, or polygons with an attribute table.

- GeoParquet defines how to store vector data in Apache Parquet, a columnar storage format (like those used by many cloud data warehouses). Columnar storage suits queries such as “Give me all points with height greater than 10 m”.

- GeoParquet is highly compressed.

- GeoParquet can be stored in a single- or multi-file store.

- GeoParquet is supported in GeoPandas (Python) and in R through GeoArrow.

- GeoArrow provides the potential for cross-language, shared in-memory access.

- Specifications for spatial-indexing, projection handling, etc. are still in discussion.

- Learn more: https://github.com/opengeospatial/geoparquet

image source: https://www.wherobots.ai/post/spatial-data-parquet-and-apache-sedona
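GeoParquet's geospatial metadata is a JSON document stored in the Parquet file's key-value metadata under the key "geo", naming the primary geometry column and each geometry column's encoding (for example WKB). The sketch below parses such a document and, as a pure-Python stand-in for a columnar engine, evaluates “height greater than 10 m” by scanning only the height column. The helper names and sample table are hypothetical; in practice a library such as GeoPandas or PyArrow reads the metadata and column data from the file.

```python
import json


def parse_geo_metadata(raw):
    """Return (primary geometry column, {column: encoding}) from a GeoParquet "geo" JSON string."""
    geo = json.loads(raw)
    encodings = {name: col["encoding"] for name, col in geo["columns"].items()}
    return geo["primary_column"], encodings


def rows_where_greater(table, column, threshold):
    """Toy columnar filter: scans only `column` and never touches the other columns."""
    return [i for i, value in enumerate(table[column]) if value > threshold]
```

In a real Parquet file the same query only needs the height column's pages (plus the geometry column for matching rows), which is why columnar layouts suit attribute filters over large tables.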

The End?

Not Quite

- These formats and their tooling are in active development.

- Some formats were not covered in depth, such as EPT, GeoPackage, TileDB, and Cloud-Optimized HDF5. This presentation was scoped to the formats the authors know best.

- This site will continue to be updated with new content.

References

Prior presentations and studies discussing multiple formats

- Cloud Optimized Data Formats Task 51 Study presentation slides

- COG and Zarr for Geospatial Data - draft white paper by Vincent and Ryan

- Guide for Generating and Using Cloud Optimized GeoTIFFs

- What is Analysis Ready Cloud Optimized data and Why Does it Matter? for NOAA EDMW Sept 15, 2022 - previous presentation on this topic

- Brianna-ESIP-2022.pptx - previous presentation on this topic

- An Exploration of ‘Cloud-Native Vector’ - Chris Holmes

Format Homepages and Explainers

- https://flatgeobuf.org/ - links to some example notebooks and provides the specification

- HDF in the Cloud: challenges and solutions for scientific data - Matthew Rocklin’s discussion about HDF in the cloud

- https://copc.io/ - Cloud-Optimized Point Cloud home page + explainer

- Cloud Native Geospatial Lidar with the Cloud Optimized Point Cloud - Howard Butler explains COPC in LIDAR Magazine

- Kicking the Tires: Flatgeobuf - FlatGeobuf blog post by Horace Williams